The Future of Personal Agency (Influencing One’s Own Actions in the Age of AI), 2040’s Ideas and Innovations Newsletter, Issue 102

Issue 102, April 6, 2023

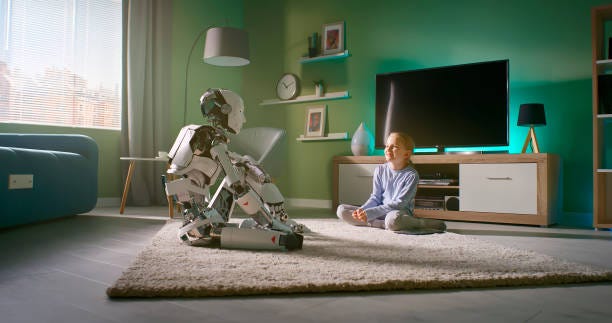

It should come as no surprise that many people feel they are losing their sense of personal agency in the face of rapid technological change, global disruptions, and polarized social and political factions. What is personal agency? It’s a “sense that I am the one who is causing or generating an action. A person with a sense of personal agency perceives himself/herself as the subject influencing his/her own actions and life circumstances,” according to Springer. Wikipedia describes self-agency, also known as “the phenomenal will,” as the sense that actions are self-generated. Scientist Benjamin Libet was the first to study it, concluding that “brain activity predicts the action before one even has conscious awareness of his or her intention to act upon that action.” That ability to predict is precisely what we have built into AI, in an effort to mimic how our brains function.
